TRANSPORT LEVEL

FUNCTIONALITY

The following are the services that are generally offered by the transport layer; remember that none of these services are mandatory. Consequently, for each application it is possible to choose the most suitable protocol for the purpose.

- Connection oriented service. Typically the network layer does not establish a persistent connection to the target host. The transport layer then takes care of creating a persistent connection which is then closed when it is no longer needed.

- Correct delivery order. Since packets can follow different paths within the network, there is no guarantee that the data will be delivered in the same order in which it was sent. The transport layer verifies that packets are reordered in the correct sequence on receipt before passing them to the upper layer.

- Reliable transfer. The protocol is responsible for ensuring that all data sent is received; if the network service used loses or corrupts packets, the transport protocol takes care of having the sender retransmit them.

- Flow control. If the hosts involved in the communication have very different performance, a faster host can “flood” a slower one with data, leading to packet loss. Using flow control, a host in difficulty can ask for the transmission rate to be lowered so that it can handle the incoming information.

- Congestion Control: the protocol recognizes a state of network congestion and adjusts the transmission speed accordingly.

- Byte orientation. Instead of handling data on a packet basis, it provides the ability to see the communication as a stream of bytes, making it easier to use.

- Multiplexing. The protocol allows several simultaneous connections to be established between the same two hosts, typically using the port abstraction. In common use, different services use different logical ports.

The name “transport” can therefore be misleading: this layer does not implement any logical or physical mechanism for transferring data on the channel (multiplexing/multiple access, addressing and switching), which is provided by the lower architectural levels through the particular transfer mode adopted. On the contrary, it makes up for the shortcomings of those transfer functions in terms of reliability by implementing the functions listed above as guarantees on the transport itself, closing the circle on everything that transport as a whole should do and guarantee.

INTERNET TRANSPORT LEVEL

In the Internet protocol stack, the most commonly used transport protocols are TCP and UDP. TCP (Transmission Control Protocol) is the more complicated of the two and provides a connection-oriented, byte-oriented end-to-end service with in-order delivery, error control and flow control. UDP (User Datagram Protocol), on the other hand, is a leaner protocol and provides a connectionless datagram service with an error detection mechanism and port-based multiplexing. The relationship between transport layer and network layer is as follows:

- Network layer: logical communication between hosts

- Transport layer: logical communication between processes running on different hosts

THE UNDERLYING LEVEL

- The network layer uses IP, whose service model is “best-effort”, i.e. unreliable:

- It does not ensure delivery

- It does not ensure the order

- It does not ensure integrity

- The transport layer has the task of extending the IP delivery services “between terminals” to one of delivery “between processes running on the terminal systems”.

This transition from host-to-host delivery to process-to-process delivery is called transport-layer multiplexing and demultiplexing.

- TCP converts the unreliable system, IP, at the network layer into a reliable system at the transport layer.

- UDP, on the other hand, extends the unreliability of IP at the transport layer.

TRANSPORT ADDRESS

- Applications that use TCP/IP register on the transport layer at a specific address, called a port

- This mechanism allows an application to identify the remote application to which to send data.

- The port is a 16-bit number (0 to 65535; port 0 is reserved and not normally used).

- TCP/IP allows applications to register on an application-defined port (such as servers) or any free port chosen by the transport layer (often clients).

- For widely used services to work, TCP/IP requires certain services to use well-defined ports on the server side.

There is a central authority, the Internet Assigned Numbers Authority (IANA), which publishes the collection of port numbers assigned to applications in RFCs (http://www.iana.org).

SOCKET

A socket, in computing, is a software abstraction that lets applications use standard, shared operating system APIs to transmit and receive data across a network. It is the point where the application code of a process accesses the communication channel by means of a port, enabling communication between processes running on two physically separate machines. From the point of view of a programmer, a socket is an object on which to read and write the data to be transmitted or received. A socket address is composed of two parts:

- IP address: unique code of the machine concerned, with a size of 32 bits (for IPv4).

- Port number: a 16-bit number that identifies, inside the machine itself, the individual process using the protocol.

This combination makes it possible to uniquely distinguish the individual requests within the network.
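As a minimal sketch of the pair described above, the following Python snippet packs an IPv4 address and a port into the 6 bytes (32 + 16 bits) that identify an endpoint. The function name `pack_endpoint` and the example address are illustrative assumptions, not part of any standard API.

```python
import ipaddress
import struct

def pack_endpoint(ip: str, port: int) -> bytes:
    """Return the 6-byte form (32-bit IPv4 address + 16-bit port) of an endpoint."""
    if not 0 <= port <= 0xFFFF:
        raise ValueError("a port must fit in 16 bits")
    # IPv4Address.packed is the 4-byte big-endian address;
    # "!H" encodes the port as a 16-bit big-endian integer.
    return ipaddress.IPv4Address(ip).packed + struct.pack("!H", port)

# Hypothetical endpoint: a web server at a documentation-range address.
endpoint = pack_endpoint("192.0.2.10", 80)
```

Two endpoints on the same machine differ only in the last two bytes, which is what allows several processes to share one IP address.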

THE TCP PROTOCOL

The Transmission Control Protocol (TCP) is a transport-layer protocol for packet networks, belonging to the Internet protocol suite. It deals with transmission control, that is, with making data communication between sender and recipient reliable. Most Internet applications rely on it. It is present only on the network terminals (hosts) and not on the internal switching nodes of the transport network; it is implemented as a network software layer within the host operating system, and the transmitting or receiving terminal accesses it through system calls defined in the system API.

TCP sits at the transport layer (level 4) of the OSI reference model and is usually used together with the network layer protocol (OSI level 3) IP. In the TCP/IP model, TCP occupies layer 4, transport.

TCP was designed in line with the requirements that the ISO/OSI model sets for the transport level, with the aim of overcoming the lack of reliability and control that arose with the large-scale interconnection of local networks into a single large geographical network. It uses the services of the lower-level protocols (IP and the physical and data link layer protocols), which define the transfer mode on the communication channel but offer no guarantee on delivery in terms of delay, loss and errors of the transmitted packets, no flow control between terminals and no control of network congestion. TCP compensates for these shortcomings and builds a reliable communication channel between two network application processes. The channel consists of a bidirectional stream of bytes, available once a connection has been established between the two communicating terminals.

In addition, some features of TCP are vital for the overall good functioning of an IP network. From this point of view TCP can be considered a protocol that builds connections and guarantees reliability on top of an underlying IP network that is essentially best-effort.

MAIN FEATURES

- TCP is a connection-oriented protocol, that is, before it can transmit data, it must establish communication, negotiating a connection between sender and recipient, which remains active even in the absence of data exchange and is explicitly closed when no longer needed. It therefore has the functionality to create, maintain and close a connection.

- TCP is a reliable protocol: it guarantees the delivery of segments to their destination through the acknowledgment mechanism.

- The service offered by TCP is the transport of a bidirectional byte stream between two applications running on different hosts. The protocol allows the two applications to transmit simultaneously in both directions, so the service can be considered “Full-duplex” even if not all application protocols based on TCP use this possibility.

- The stream of bytes produced by the application (or application protocol) on the sending host is taken over by TCP for transmission. It is divided into blocks, called segments, and delivered to the TCP on the receiving host, which passes it to the application indicated by the destination port number in the segment header (e.g. HTTP, port 80).

- TCP guarantees that the transmitted data, if it reaches its destination, does so in order and only once (“at most once”). More formally, the protocol provides the higher levels with a service equivalent to a direct physical connection carrying a stream of bytes. This is accomplished through various acknowledgment and timeout retransmission mechanisms.

- TCP offers error checking functionality on incoming packets thanks to the checksum field contained in its PDU. A Protocol Data Unit (PDU) is the unit of information or packet exchanged between two peer entities in a communication protocol of a layered network architecture.

- TCP provides flow control between the communicating terminals and congestion control on the connection, through the sliding window mechanism. This makes it possible to optimize the use of the receive and send buffers on the two end devices (flow control) and to reduce the number of segments sent when the network is congested (congestion control).

- TCP provides a connection multiplexing service on a host, through the port number mechanism.

- Point-to-point: one sender, one recipient. Multicast is not possible with TCP.

- Reliable, in-sequence byte stream: no “message boundaries”.

- Pipelined: TCP flow and congestion control define the size of the window.

- Sending and receiving buffers: a TCP connection consists of buffers, variables and two sockets, one at each end system.

- Flow controlled: the sender does not overload the recipient.

COMPARISON WITH UDP

The main differences between TCP and User Datagram Protocol (UDP), the other main transport protocol in the Internet protocol suite, are:

- TCP is a connection-oriented protocol. Therefore, to establish, maintain and close a connection, it is necessary to send service segments which increase the communication overhead. Conversely, UDP is connectionless and only sends the datagrams required by the application layer; (note: packets take different names depending on the circumstances to which they refer: segments (TCP) or datagrams (UDP));

- UDP does not offer any guarantee on the reliability of the communication, on the actual arrival of the datagrams, or on their order of arrival; TCP, on the contrary, through the acknowledgment and retransmission-on-timeout mechanisms, is able to guarantee data delivery, at the cost of greater overhead (visible by comparing the size of the headers of the two protocols); TCP is also able to reorder the segments arriving at the recipient through a field of its header: the sequence number;

- the communication object of TCP is a stream of bytes, while that of UDP are single datagrams.

The use of the TCP protocol over UDP is, in general, preferred when it is necessary to have guarantees on the delivery of data or on the order of arrival of the various segments (as for example in the case of file transfers). On the contrary UDP is mainly used when the interaction between the two hosts is idempotent or in case there are strong constraints on the speed and the economy of resources of the network (eg streaming in real time, multiplayer video games).

TCP SEGMENT

The TCP PDU is called a segment. Each segment is normally wrapped in an IP packet and consists of the TCP header and a payload, that is, the data coming from the application layer (e.g. HTTP). The data contained in the header constitutes a communication channel between the sending TCP and the receiving TCP, which is used to implement the functionality of the transport layer and is not accessible to the higher layers.

A TCP segment is structured as follows:

- Source port [16 bit] – Identifies the port number on the sending host associated with the TCP connection.

- Destination port [16 bit] – Identifies the port number on the receiving host associated with the TCP connection.

- Sequence number [32 bit] – Sequence number, indicates the offset (expressed in bytes) of the beginning of the TCP segment within the complete flow, starting from the Initial Sequence Number (ISN), decided upon opening the connection.

- Acknowledgment number [32 bit] – Acknowledgment number, has meaning only if the ACK flag is set to 1, and confirms the receipt of a part of the data stream in the opposite direction, indicating the value of the next Sequence number that the sending host of the TCP segment expects to receive.

- Data offset [4 bit] – Indicates the length (in 32 bit dword) of the TCP segment header; this length can vary from 5 dwords (20 bytes) to 15 dwords (60 bytes) depending on the presence and length of the optional Options field.

- Reserved [4 bit] – Bits not used and prepared for future protocol development; they should be set to zero.

- Flags [8 bit] – Bits used for protocol control:

- CWR (Congestion Window Reduced) – if set to 1 it indicates that the source host has received a TCP segment with the ECE flag set to 1 (added to the header in RFC 3168).

- ECE [ECN (Explicit Congestion Notification) -Echo] – if set to 1 it indicates that the host supports ECN during the 3-way handshake (added to the header in RFC 3168).

- URG – if set to 1 it indicates that urgent data is present in the stream at the position (offset) indicated by the Urgent pointer field. Urgent Pointer points to the end of urgent data;

- ACK – if set to 1 it indicates that the Acknowledgment number field is valid;

- PSH – if set to 1 it indicates that the incoming data must not be buffered but immediately passed to the upper levels of the application;

- RST – if set to 1 it indicates that the connection is not valid; it is used in the event of a serious error; sometimes used in conjunction with the ACK flag for closing a connection.

- SYN – if set to 1 it indicates that the sender host of the segment wants to open a TCP connection with the recipient host and specifies the value of the Initial Sequence Number (ISN) in the Sequence number field; it is meant to synchronize the sequence numbers of the two hosts. The host that sent the SYN must wait for a SYN / ACK packet from the remote host.

- FIN – if set to 1 it indicates that the sender host of the segment wants to close the open TCP connection with the receiving host. The sender waits for confirmation from the receiver (the ACK of the FIN). At this point the connection is considered half closed: the host that sent the FIN can no longer send data, while the other host still has the communication channel available. When the other host also sends a segment with FIN set, the connection, after the corresponding ACK, is considered completely closed.

- Window size [16 bit] – Indicates the size of the receiving window of the sending host, ie the number of bytes that the sender is able to accept starting from the one specified by the acknowledgment number.

- Checksum [16 bit] – Check field used to check the validity of the segment. It is obtained by making the 1’s complement of the 16-bit ones complement sum of the entire TCP header (with the checksum field set to zero), of the entire payload, with the addition of a pseudo header consisting of: source IP address ( 32bit), destination IP address (32bit), one byte of zeros, one byte indicating the protocol and two bytes indicating the length of the TCP packet (header + data).

- Urgent pointer [16 bit] – Pointer to urgent data, it has meaning only if the URG flag is set to 1 and indicates the deviation in bytes starting from the Sequence number of the urgent data byte within the stream.

- Options – Options (optional) for advanced protocol uses.

- Data – the payload to be transmitted, i.e. the PDU coming from the upper level.
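The field layout above can be illustrated with a short decoder for the fixed 20-byte part of the header. This is a sketch only: the function name `parse_tcp_header` and the dictionary keys are assumptions made for the example, options are not interpreted, and the checksum is not verified.

```python
import struct

# Flag names from the least significant bit (FIN) to the most significant (CWR).
FLAG_NAMES = ["FIN", "SYN", "RST", "PSH", "ACK", "URG", "ECE", "CWR"]

def parse_tcp_header(segment: bytes) -> dict:
    """Decode the fixed part of a TCP header; options are skipped, not parsed."""
    (src_port, dst_port, seq, ack,
     offset_flags, window, checksum, urgent) = struct.unpack("!HHIIHHHH", segment[:20])
    header_len = (offset_flags >> 12) * 4   # data offset is counted in 32-bit words
    flags = {name for bit, name in enumerate(FLAG_NAMES) if offset_flags & (1 << bit)}
    return {
        "src_port": src_port, "dst_port": dst_port,
        "seq": seq, "ack": ack,
        "header_len": header_len, "flags": flags,
        "window": window, "checksum": checksum, "urgent": urgent,
        "payload": segment[header_len:],
    }

# Hypothetical SYN segment: data offset 5 (20 bytes), only the SYN bit set.
raw = struct.pack("!HHIIHHHH", 49152, 80, 1000, 0, (5 << 12) | 0x02, 65535, 0, 0)
info = parse_tcp_header(raw)
```

The 16-bit word unpacked as `offset_flags` holds the 4-bit data offset, the 4 reserved bits and the 8 flag bits, in that order.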

CONNECTION

Before any data is transferred on the communication channel and before any transmission control is applied to the data flow, TCP is responsible for establishing the connection between the application processes of the communicating terminals. Each end is identified by its socket, i.e. the pair (IP address, port), for both sender and recipient. The purpose of the TCP connection is in any case to reserve resources between application processes that exchange information or services (e.g. client and server). At the end of the communication, TCP tears the connection down. The two procedures are distinct and described below.

OPENING A CONNECTION – THREE-WAY HANDSHAKE

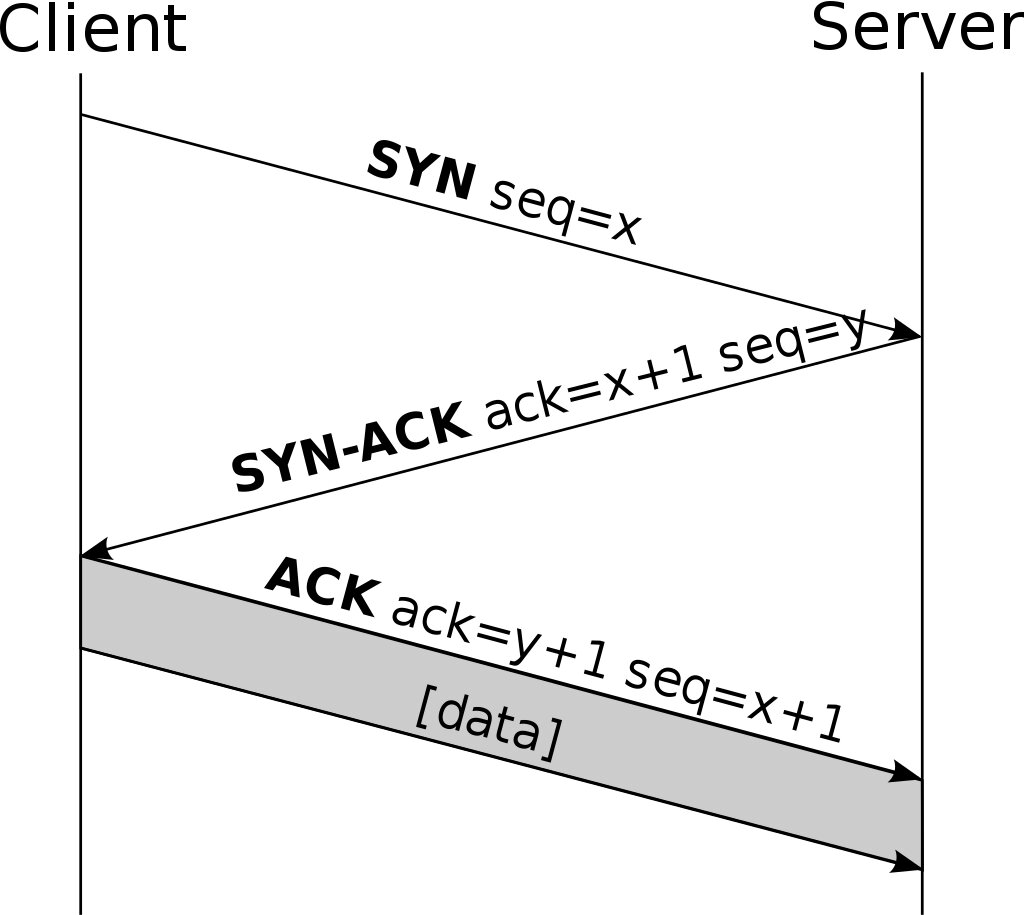

The procedure used to reliably establish a TCP connection between two hosts is called a three-way handshake, indicating the need to exchange 3 messages between the sending host and the receiving host for the connection to be established correctly. For example, consider that host A intends to open a TCP connection with host B; the steps to follow are:

- A sends a SYN segment to B – the SYN flag is set to 1 and the Sequence number field contains the value x which specifies the Initial Sequence Number of A (random number);

- B sends a SYN / ACK segment to A – the SYN and ACK flags are set to 1, the Sequence number field contains the y value which specifies the Initial Sequence Number of B and the Acknowledgment number field contains the value x + 1 confirming the receiving the ISN of A;

- A sends an ACK segment to B – the ACK flag is set to 1 and the Acknowledgment number field contains the value y + 1 confirming receipt of B’s ISN.

The third segment would not, ideally, be necessary for opening the connection, since once A has received the second segment both hosts have expressed their willingness to open it. It is nevertheless needed so that host B can also estimate the initial timeout, as the time elapsed between sending a segment and receiving the corresponding ACK. The SYN flag is useful in practical implementations of the protocol and in traffic analysis by firewalls: in TCP traffic, SYN segments establish new connections, while segments without the flag belong to connections already established. The segments used during the handshake are usually ‘header only’, i.e. their Data field is empty, because this is a phase of synchronization between the two hosts and not of data exchange. The three-way handshake is necessary because sequence numbers (ISNs) are not tied to a global network clock, and each host may have its own way of calculating its Initial Sequence Number. On receiving the first SYN, a host cannot tell whether it is a delayed segment from a previous connection unless it remembers the last sequence number used on that connection; it therefore has to ask the sending host to verify the SYN.
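The three steps of the handshake can be traced with a few lines of Python. This is a simplified model, not a network implementation: segments are plain dictionaries, the function name `three_way_handshake` is an assumption, and the ISNs x and y are passed in explicitly rather than generated at random.

```python
MOD = 2 ** 32  # sequence numbers are 32-bit values and wrap around

def three_way_handshake(x: int, y: int) -> list:
    """Return the three segments exchanged when A (ISN x) opens a connection to B (ISN y)."""
    # 1. A -> B: SYN carrying A's Initial Sequence Number.
    syn = {"from": "A", "flags": {"SYN"}, "seq": x}
    # 2. B -> A: SYN/ACK carrying B's ISN and acknowledging x + 1.
    syn_ack = {"from": "B", "flags": {"SYN", "ACK"},
               "seq": y, "ack": (syn["seq"] + 1) % MOD}
    # 3. A -> B: ACK acknowledging y + 1.
    ack = {"from": "A", "flags": {"ACK"},
           "seq": syn_ack["ack"], "ack": (syn_ack["seq"] + 1) % MOD}
    return [syn, syn_ack, ack]

segments = three_way_handshake(x=100, y=300)
```

The modulo operation only matters when an ISN is close to the top of the 32-bit range, but it keeps the sketch faithful to the width of the header field.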

CLOSURE OF A CONNECTION – DOUBLE TWO-WAY HANDSHAKE

Once established, a TCP connection is not treated as a single bidirectional connection but as the interaction of two one-way connections; each party therefore has to terminate its own. There can also be half-closed connections, where only one of the two hosts has closed its side because it has nothing left to transmit, but can (and must) still receive data from the other host. The connection can be closed in two ways: with a three-way handshake, in which the two parties close their respective connections at the same time, or with a four-way handshake (better described as two separate two-way handshakes), in which the two connections are closed at different times. The three-way handshake for closing is the same as the one used for opening, except that the flag used is FIN instead of SYN: one host sends a segment with FIN set, the other replies with FIN + ACK, and the first sends the final ACK, after which the entire connection is terminated.

The double two-way handshake, on the other hand, is used when the two communicating hosts do not disconnect simultaneously. In this case, one of the two hosts sends the FIN request and waits for the ACK in response; the other terminal later does the same, for a total of 4 segments. A more abrupt way of closing the connection is also possible, by setting the RST flag in a segment, which interrupts the connection in both directions. The TCP that receives an RST closes the connection and stops sending any data.

MULTIPLEXING AND PORTS

Each active TCP connection is associated with a socket opened by a process (the socket is the tool offered by the operating system to applications to use the functionality of the network). TCP takes care of sorting data between active connections and related processes. For this, each connection between two hosts is associated with a port number on each of the two hosts, which is an unsigned 16-bit integer (0-65535), contained in the appropriate header field. A TCP connection will then be identified by the IP addresses of the two hosts and the ports used on the two hosts. In this way, a server can accept connections from multiple clients simultaneously through one or more ports, a client can establish multiple connections to multiple destinations, and it is also possible for a client to simultaneously establish multiple independent connections to the same port on the same server.
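A minimal sketch of this sorting step, assuming a table keyed by the four values that identify a connection. The names `connections`, `register` and `demultiplex`, as well as the addresses and labels, are hypothetical and chosen only for illustration.

```python
# Table of active connections: (local IP, local port, remote IP, remote port) -> owner.
connections = {}

def register(local_ip, local_port, remote_ip, remote_port, owner):
    """Record which process (here just a label) owns a connection."""
    connections[(local_ip, local_port, remote_ip, remote_port)] = owner

def demultiplex(dst_ip, dst_port, src_ip, src_port):
    """Find the owner of an incoming segment from its addresses and ports."""
    return connections.get((dst_ip, dst_port, src_ip, src_port))

# The same client opens two independent connections to port 80 of the same server;
# only the client-side port differs.
register("192.0.2.10", 80, "198.51.100.7", 50001, "worker-1")
register("192.0.2.10", 80, "198.51.100.7", 50002, "worker-2")
```

Because the client port is part of the key, the two connections never collide even though both target the same server port.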

RELIABILITY OF THE COMMUNICATION

The sequence number is used to identify the payload of the TCP segment and place it in order within the data stream. With the packet-switched transmission typical of data networks, each packet can follow a different path and arrive out of sequence. On reception, TCP expects the segment that follows the last one received in order, that is, the one whose sequence number equals the sequence number of the last segment received in order plus the size of that segment's payload (i.e. its Data field).

In relation to the TCP sequence number, the recipient carries out the following procedures:

- if the received sequence number is the one expected, it sends the segment payload directly to the application level process and frees its buffers.

- if the received sequence number is greater than the expected one, it deduces that one or more preceding segments have been lost, delayed by the underlying network layer or are still in transit. It therefore temporarily stores the payload of the out-of-sequence segment in a buffer, so that it can deliver it to the application process only after all the preceding segments have been received and delivered, waiting for their arrival up to a predetermined time limit (time-out). When the ordered block of segments is delivered, the corresponding contents of the buffer are freed. From the point of view of the application process, therefore, the data arrives in order even if the network has altered that order for any reason, which fulfills the requirement of in-order delivery.

- If the received sequence number is lower than expected, the segment is considered a duplicate of one already received and already sent to the application layer and therefore discarded. This allows for the elimination of network duplicates.
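The three cases above can be sketched as a small receiver class. This is a simplified model under stated assumptions: segments never partially overlap, sequence numbers do not wrap around, no time-out is modelled, and the names `Receiver`, `on_segment` and `delivered` are invented for the example.

```python
class Receiver:
    """Reorders segments and discards duplicates before delivering to the application."""

    def __init__(self, initial_seq: int):
        self.expected = initial_seq   # next sequence number expected in order
        self.buffer = {}              # seq -> payload of segments that arrived early
        self.delivered = bytearray()  # stands in for the application-level process

    def _deliver(self, payload: bytes) -> None:
        self.delivered += payload
        self.expected += len(payload)

    def on_segment(self, seq: int, payload: bytes) -> int:
        if seq == self.expected:
            # Expected segment: deliver it, then any buffered segments that now follow.
            self._deliver(payload)
            while self.expected in self.buffer:
                self._deliver(self.buffer.pop(self.expected))
        elif seq > self.expected:
            # Out of sequence: hold it until the gap is filled.
            self.buffer[seq] = payload
        # seq < expected: duplicate of data already delivered, so it is dropped.
        return self.expected          # value to send back as acknowledgment number

r = Receiver(initial_seq=0)
acks = [r.on_segment(5, b"world"), r.on_segment(0, b"hello"), r.on_segment(0, b"hello")]
```

The returned value is the next expected sequence number, which is what the receiver would place in the Acknowledgment number field.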

PACKAGE TRACKING AND RETRANSMISSION

For each segment received in sequence, the receiving TCP also sends an acknowledgment number. The acknowledgment number carried in a segment refers to the data flow in the opposite direction: the acknowledgment number sent from A (the receiver) to B is equal to the sequence number that A expects next and therefore concerns the flow of data from B to A, as a sort of report on what has been received.

TCP adopts a cumulative acknowledgment policy: the arrival of an acknowledgment number tells the transmitting TCP that the receiver has correctly received the data up to the byte whose sequence number is the acknowledgment number minus 1, together with everything before it. For this reason the transmitting TCP temporarily keeps in a buffer a copy of all data sent but not yet acknowledged; when it receives an acknowledgment number, it deduces that all the segments before that number have been correctly received and frees the corresponding part of its buffer. The maximum amount of data that can be acknowledged cumulatively is set by the size of the so-called sliding window.

For each segment sent, TCP starts a timer, called the retransmission timer or RTO (Retransmission Time Out), whose duration must be neither too long nor too short. If no ACK for the segment arrives before the timer expires, TCP assumes that all segments transmitted from that one onwards have been lost and retransmits them. Note that the acknowledgment number mechanism does not allow the receiver to tell the transmitter that one segment has been lost while some of the following ones have been received (there is no negative acknowledgment mechanism), so the loss of a single segment can cause many to be retransmitted. This suboptimal behavior is compensated for by the simplicity of the protocol.

This technique is called Go-Back-N (go back N segments); the alternative, in which only the packets actually lost are retransmitted, is called Selective Repeat. Some optional header fields nevertheless make selective repetition possible. Acknowledgment numbers and retransmission timers together provide reliable delivery, i.e. they ensure that all data sent is delivered even if some packets are lost in transit through the network (error control by means of acknowledgments).
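The sender side of this scheme can be sketched as follows. It is a toy model, not TCP itself: the window is counted in segments for simplicity, there is no real timer (the caller invokes `on_timeout`), and the class and method names are assumptions made for the example.

```python
class GoBackNSender:
    """Keeps unacknowledged segments and retransmits all of them on timeout."""

    def __init__(self, window: int):
        self.window = window   # assumption: maximum number of unacknowledged segments
        self.unacked = {}      # seq -> payload, kept in sending order
        self.next_seq = 0

    def send(self, payload: bytes):
        """Return the sequence number used, or None if the window is full."""
        if len(self.unacked) >= self.window:
            return None
        seq = self.next_seq
        self.unacked[seq] = payload          # keep a copy until it is acknowledged
        self.next_seq += len(payload)
        return seq

    def on_ack(self, ack: int) -> None:
        """Cumulative ACK: every segment that ends at or before `ack` is confirmed."""
        confirmed = [s for s, p in self.unacked.items() if s + len(p) <= ack]
        for seq in confirmed:
            del self.unacked[seq]

    def on_timeout(self) -> list:
        """Go-Back-N: everything still unacknowledged is sent again."""
        return list(self.unacked.items())

s = GoBackNSender(window=3)
seqs = [s.send(b"aaaa"), s.send(b"bbbb"), s.send(b"cccc"), s.send(b"dddd")]
s.on_ack(8)                  # confirms bytes 0..7, i.e. the first two segments
pending = s.on_timeout()
```

A single acknowledgment frees two segments at once here, which is the practical effect of the cumulative policy.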

FLOW CONTROL

The reliability of communication in TCP is also guaranteed by the so-called flow control, i.e. ensuring that the flow of transmitted data does not exceed the reception or storage capacity of the receiver, which would cause packet loss and add load and latency through the resulting retransmissions. It is implemented by having the recipient advertise a value known as RCV_WND (reception window), a dynamic variable (i.e. dependent on the available space) which specifies the maximum number of bytes that the recipient can accept. Define:

LastByteRead: number of the last byte in the data stream that the application process in B has read from the buffer

LastByteRcvd: number of the last byte in the data stream coming from the network that was copied to the previously allocated RcvBuffer receive buffer

Then, since TCP must avoid overflowing its own buffer:

RCV_WND = RcvBuffer – [LastByteRcvd – LastByteRead]

where, to prevent overflow:

RcvBuffer ≥ [LastByteRcvd – LastByteRead]
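A worked instance of the two relations above, written as a small Python function. The function name `rcv_wnd` and the sample values are assumptions chosen for illustration; all quantities are in bytes.

```python
def rcv_wnd(rcv_buffer: int, last_byte_rcvd: int, last_byte_read: int) -> int:
    """RCV_WND = RcvBuffer - [LastByteRcvd - LastByteRead]."""
    buffered = last_byte_rcvd - last_byte_read   # bytes received but not yet read
    if not 0 <= buffered <= rcv_buffer:
        # Violates RcvBuffer >= [LastByteRcvd - LastByteRead]: the buffer would overflow.
        raise ValueError("buffer occupancy out of range")
    return rcv_buffer - buffered

# 4096-byte buffer, 3000 bytes received so far, 1000 of them already read by the application.
window = rcv_wnd(rcv_buffer=4096, last_byte_rcvd=3000, last_byte_read=1000)
```

With these values 2000 bytes are still waiting in the buffer, so 2096 bytes of space can be advertised to the sender.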

In turn, the sender keeps track of the last byte sent and the last byte for which an ACK was received, so that it does not overflow the recipient’s buffer. Note that, if the reception window is zero (RCV_WND == 0), the sender continues to send one-byte segments in order to keep sender and receiver synchronized. TCP flow control suffers from some problems that occur on both the transmitter and the receiver side. They go by the name of Silly Window syndrome and have different causes and effects depending on the side.

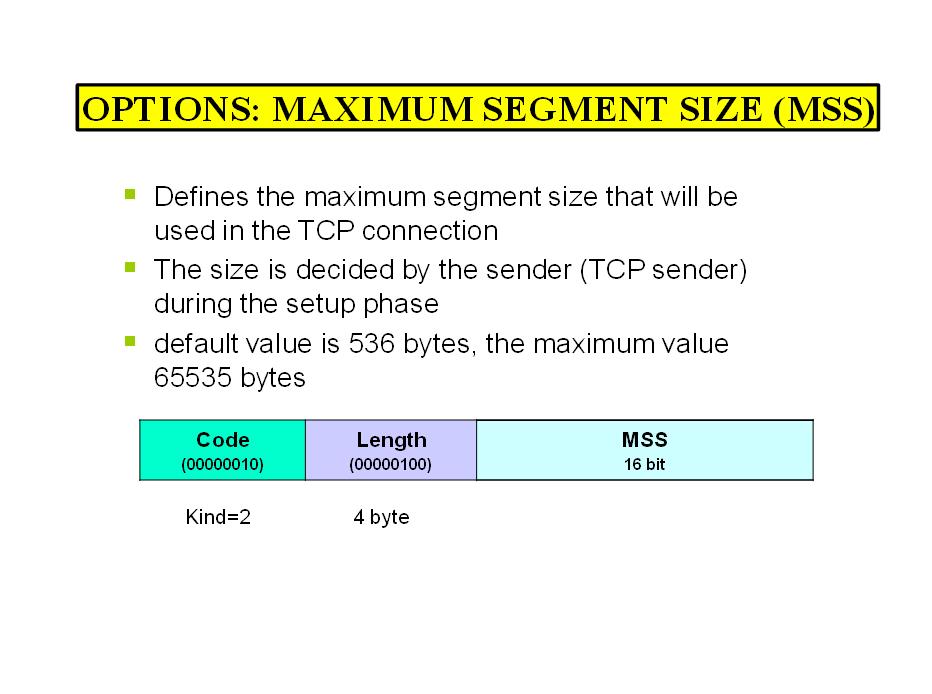

Silly Window on the receiver side: occurs when the receiver empties its reception buffer very slowly. Each time the receiver extracts a little data from the buffer, only a small space is freed (a very small Receive Window), and this value is communicated back to the transmitter. The transmitter then has to use a very narrow transmission window and may be forced to send segments whose payload is very short compared with the canonical 20-byte header, which lowers transmission efficiency. To mitigate this problem, TCP makes the receiver “lie” to the transmitter by advertising a null window until its reception buffer has been emptied by half or by at least one MSS (Maximum Segment Size), so that the transmitter does not send very short segments. This solution is called the Clark algorithm.

Silly Window on the transmitter side: occurs when the application generates data very slowly. Since TCP builds segments from whatever data is present in the transmission buffer, it could be forced to create very short segments (with a lot of overhead). The solution is called the Nagle algorithm: the source TCP sends the first portion of data even if it is short, and afterwards creates new segments only when the output buffer contains enough data to fill an MSS or when it receives an acknowledgment for the data already sent.
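Both remedies reduce to a simple decision rule, sketched below. The function names and parameters are assumptions made for the example, real implementations add further conditions, and the threshold in the receiver-side rule is taken here as the smaller of half the buffer and one MSS.

```python
def clark_advertised_window(free_space: int, buffer_size: int, mss: int) -> int:
    """Receiver side: advertise zero until a worthwhile amount of space is free."""
    threshold = min(buffer_size // 2, mss)
    return free_space if free_space >= threshold else 0

def nagle_may_send(buffered_bytes: int, mss: int, unacked_bytes: int) -> bool:
    """Sender side: send a full segment, or a short one only when nothing is in flight."""
    if buffered_bytes == 0:
        return False
    return buffered_bytes >= mss or unacked_bytes == 0

# Receiver with an 8192-byte buffer and an MSS of 1460 bytes.
small = clark_advertised_window(free_space=100, buffer_size=8192, mss=1460)
large = clark_advertised_window(free_space=1460, buffer_size=8192, mss=1460)
```

In the sender-side rule, `unacked_bytes == 0` covers both the very first short segment and the case in which the acknowledgment for earlier data has arrived.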

CONGESTION CONTROL

Finally, the last type of control carried out by TCP to ensure communication reliability is the so-called congestion control: limiting as far as possible the congestion caused by excessive traffic on network devices, which leads to loss of packets in transit and to extra load and latency from the resulting retransmissions. TCP does this by modulating over time the amount of data being transmitted as a function of the congestion state inside the network. The peculiarity of this control is that it acts on transmission at the ends, that is, on the network terminals, and not on the switching nodes inside the network, using the information that each terminal can deduce about the state of its own packet transmission. Specifically, the congestion state is “estimated” by taking as reference parameter the loss of transmitted packets, inferred from missing ACKs. The control is then implemented by the sender through a variable known as C_WND (congestion window), which dynamically sets, over time, the maximum number of segments that may be transmitted to the recipient.
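The text does not say how the congestion window combines with the reception window of the previous section; the usual rule is that the sender is limited by the smaller of the two. The sketch below illustrates only that rule, with both windows expressed in bytes as a simplifying assumption; the function name `sendable_bytes` is invented, and the way C_WND itself grows and shrinks is not modelled.

```python
def sendable_bytes(c_wnd: int, rcv_wnd: int, in_flight: int) -> int:
    """Bytes the sender may still transmit given both windows and unacknowledged data."""
    limit = min(c_wnd, rcv_wnd)        # the tighter of the two constraints wins
    return max(0, limit - in_flight)   # never negative, even if a window has just shrunk

# The network allows 8000 bytes and the receiver 20000; 6000 bytes are already in flight.
budget = sendable_bytes(c_wnd=8000, rcv_wnd=20000, in_flight=6000)
```

Here the congestion window is the binding constraint, so only 2000 more bytes may be sent even though the receiver could accept far more.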

THE UDP PROTOCOL

The User Datagram Protocol (UDP), in telecommunications, is one of the main network protocols of the Internet protocol suite. It is a packet transport layer protocol, usually used in conjunction with the IP network layer protocol.

OPERATION

Unlike TCP, UDP is a connectionless protocol; it also does not manage the reordering of packets or the retransmission of lost ones, and is therefore generally considered less reliable. On the other hand, it is very fast (there is no latency for reordering and retransmission) and efficient for “light” or time-sensitive applications. It is generally used for applications for which a delayed packet has no value, for example real-time audio-video transmission (streaming and VoIP are the most common uses) or the transmission of other information on the status of a system, as in online multiplayer games. Since real-time applications often require a minimum transmission bit rate, do not want packets to be excessively delayed and can tolerate some data loss, the TCP service model may not be particularly suited to their characteristics. In Internet telephony (VoIP), a reordered packet is useless because it refers to a moment already past; with TCP, a packet not received would stall the stream until it arrived, so that a long silence would be heard, followed by all the packets that had not arrived on time.

UDP provides only the basic services of the transport layer, namely:

- multiplexing of connections, obtained through the port assignment mechanism;

- error checking (data integrity) using a checksum, inserted in a packet header field.

TCP, by contrast, also ensures reliable data transfer, flow control and congestion control.

UDP is a stateless protocol, that is, it does not keep track of the connection status and therefore has less information to store than TCP: a server dedicated to a particular application that chooses UDP as a transport protocol can therefore support many more active clients.
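
The connectionless behaviour described above can be sketched with Python's standard socket module. The function name send_one_datagram, the use of the loopback address and the timeout value are assumptions made for this example; it is a minimal sketch, not a model of a real application.

```python
import socket


def send_one_datagram(message: bytes, timeout: float = 2.0) -> bytes:
    """Send one UDP datagram to a local receiver and return what it received."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # Port 0 asks the operating system to pick any free port.
        receiver.bind(("127.0.0.1", 0))
        receiver.settimeout(timeout)
        address = receiver.getsockname()

        # No connection is set up first: the datagram is simply addressed
        # to an (IP, port) pair and handed to the network.
        sender.sendto(message, address)

        # The receiver keeps no per-client state; each datagram arrives
        # together with the address it came from.
        data, _source = receiver.recvfrom(2048)
        return data
    finally:
        sender.close()
        receiver.close()


if __name__ == "__main__":
    print(send_one_datagram(b"hello"))
```

On the loopback interface the datagram is practically always delivered; over a real network the same call could lose it silently, and the receiver would only notice through the timeout.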

STRUCTURE OF A UDP DATAGRAM

Header:

- Source port [16 bit] – identifies the port number on the host of the sender of the datagram;

- Destination port [16 bit] – identifies the port number on the host of the recipient of the datagram;

- Length [16 bit] – contains the total length in bytes of the UDP datagram (header + data);

- Checksum [16 bit] – contains the checksum of the datagram, computed over the header, the data and a pseudo-header that includes the source and destination IP addresses. The calculation algorithm is defined in the RFC of the protocol (RFC 768).

Payload:

- Data – contains the message data.
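
To make the layout concrete, the following Python sketch packs the four 16-bit header fields and computes the checksum over an IPv4 pseudo-header, the header and the data, following the one's complement sum described in RFC 768. The function names and the example addresses are hypothetical, and only IPv4 is considered.

```python
import socket
import struct


def ones_complement_sum(data: bytes) -> int:
    """Sum 16-bit big-endian words, folding any carry back into the low 16 bits."""
    if len(data) % 2:
        data += b"\x00"                  # pad odd-length data with a zero byte
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return total


def build_udp_datagram(src_ip: str, dst_ip: str,
                       src_port: int, dst_port: int, payload: bytes) -> bytes:
    """Return header + payload with the checksum field filled in (IPv4 only)."""
    length = 8 + len(payload)            # the header is 4 fields of 16 bits
    # Pseudo-header: source IP, destination IP, zero byte, protocol 17 (UDP),
    # UDP length. It is used for the checksum but never transmitted.
    pseudo_header = (socket.inet_aton(src_ip) + socket.inet_aton(dst_ip)
                     + struct.pack("!BBH", 0, 17, length))
    header = struct.pack("!HHHH", src_port, dst_port, length, 0)
    checksum = ~ones_complement_sum(pseudo_header + header + payload) & 0xFFFF
    if checksum == 0:
        checksum = 0xFFFF                # zero is reserved for "no checksum"
    return struct.pack("!HHHH", src_port, dst_port, length, checksum) + payload


if __name__ == "__main__":
    datagram = build_udp_datagram("192.0.2.1", "192.0.2.2", 5000, 53, b"hello")
    print(datagram.hex())
```

A receiver can verify the datagram by summing the pseudo-header and the whole datagram, checksum included: if nothing was corrupted the result is 0xFFFF.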

APPLICATIONS THAT USE UDP

Network applications that need a reliable transfer of their data typically do not rely on UDP, while applications that tolerate some loss and are time-sensitive rely on it instead. Furthermore, UDP is used for broadcast communications (sending to all terminals in a local network) and multicast communications (sending to all terminals subscribed to a service).

Below is a list of the main Internet services and the protocols they adopt:

AI DEEPENING

The transport layer is one of the layers of the Open Systems Interconnection (OSI) and TCP/IP models, and is responsible for managing end-to-end communication between two devices on a network. Its main role is to provide a data transmission service between applications that are on different devices and, depending on the protocol chosen, to ensure that the data arrives correctly, in order and without errors. The transport layer is above the network layer and below the session layer in the OSI model, while in the TCP/IP model it is above the Internet layer and below the application layer.

Main functions of the transport layer:

1. Data segmentation: Data that comes from the upper layer (session or application layer) is divided into smaller segments. This is important because networks often limit the maximum size of data packets that can be transmitted. The transport layer fragments the data into segments that can be handled by the underlying network.

2. Reliability of transmission: One of the key responsibilities of the transport layer is to ensure that data arrives correctly. The protocol can provide various error-checking mechanisms, such as checksums, to verify the integrity of the data and ensure that it is not corrupted during transfer.

3. Flow control: This mechanism allows the transport layer to manage the amount of data the sender can send before receiving feedback from the receiver, thus avoiding overloading the receiver with too much data at once.

4. Congestion control: The transport layer monitors the network to avoid congestion. If congestion occurs, it reduces the data rate to prevent further packet loss.

5. Multiplexing/demultiplexing: The transport layer allows multiple applications to use the network simultaneously by identifying each connection through logical ports. This process is called multiplexing when data from multiple applications are combined into a single data stream. Demultiplexing, on the other hand, separates these streams when they arrive at their destination.

6. Connection management: Transport layer protocols can be connection-oriented or connectionless.

- In connection-oriented protocols (e.g., TCP), the transport layer establishes a logical connection between the sender and the receiver before starting to transmit data. In TCP, segments are also numbered, so that they can be reordered correctly if they are received out of sequence.

- In connectionless protocols (e.g., UDP), no formal connection is established and data is sent independently without checking whether it was received correctly.
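
Segmentation (point 1) and the reordering of numbered segments (point 6) can be sketched in a few lines of Python. The function names segment and reassemble and the tiny segment size are assumptions for illustration; real protocols number bytes or segments in their own ways and also deal with loss and duplicates, which this sketch ignores.

```python
import random


def segment(data: bytes, max_size: int):
    """Split data into (sequence_number, chunk) pairs of at most max_size bytes."""
    return [(seq, data[offset:offset + max_size])
            for seq, offset in enumerate(range(0, len(data), max_size))]


def reassemble(segments) -> bytes:
    """Put segments back in sequence-number order and join their chunks."""
    return b"".join(chunk for _seq, chunk in sorted(segments))


if __name__ == "__main__":
    segments = segment(b"data from the upper layer", 4)
    random.shuffle(segments)             # simulate out-of-order arrival
    print(reassemble(segments))
```

Because each chunk carries its sequence number, the receiver can rebuild the original data whatever order the network delivers the segments in.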

Main transport layer protocols:

1. TCP (Transmission Control Protocol):

- Connection-oriented: Establishes a connection before transmitting data (through the process called three-way handshake).

- Reliability: Ensures that packets are delivered, are correct and in order.

- Congestion and flow control: Monitors the network and adjusts data transmission to avoid overload.

- Use: It is used in applications where reliability is essential, such as web browsing (HTTP/HTTPS), file transfers (FTP) and e-mail (SMTP).

2. UDP (User Datagram Protocol):

- Connectionless: Does not establish a connection. Packets, called datagrams, are sent without checking whether they have been received.

- Low latency: Because there is no overhead due to retransmission, reordering or connection management, it is very fast and suitable for real-time applications.

- Use: It is used in applications that require speed and low latency rather than reliability, such as video/audio streaming, VoIP, online gaming, and DNS (Domain Name System).

Processes involved in the transport layer:

- Three-way handshake (in TCP only): This is the process by which a connection is established between two hosts:

1. The client sends a SYN packet to the server to initiate the connection.

2. The server responds with a SYN-ACK packet.

3. The client responds with an ACK packet. At this point, the connection is established.

- Connection termination (in TCP only): To terminate a TCP connection, a four-step process called four-way handshake is used, in which each side sends a FIN packet and receives an ACK for it, allowing both sides to close the communication in an orderly manner.
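
The three-way handshake described above can be modelled as a short exchange of messages. In the Python sketch below, the function name, the tuple layout and the example initial sequence numbers are assumptions for illustration. The acknowledgment numbers are a detail not covered in the text: in TCP each SYN consumes one sequence number, so it is acknowledged with the initial sequence number plus one.

```python
def three_way_handshake(client_isn: int, server_isn: int):
    """Return the three segments as (sender, flags, seq, ack) tuples.

    client_isn and server_isn are the initial sequence numbers chosen by
    each side. TCP sequence numbers are 32 bits wide, hence the modulo.
    """
    modulo = 2 ** 32
    syn = ("client", "SYN", client_isn, None)
    syn_ack = ("server", "SYN-ACK", server_isn, (client_isn + 1) % modulo)
    ack = ("client", "ACK", (client_isn + 1) % modulo, (server_isn + 1) % modulo)
    return [syn, syn_ack, ack]


if __name__ == "__main__":
    for sender, flags, seq, ack in three_way_handshake(100, 300):
        print(f"{sender:6} -> {flags:7} seq={seq} ack={ack}")
```

After the third segment both sides know the other's initial sequence number and have had their own acknowledged, which is why the connection can be considered established at that point.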

Importance of the transport layer:

The transport layer is crucial to ensuring that data is transferred reliably between two points in the network, regardless of the physical differences between the networks connecting these points. Without a reliable transport layer, applications that require high accuracy and data integrity, such as online banking or the transmission of important files, could not function properly.

In summary, the transport layer provides the foundation for communications between applications in a network, ensuring that data transmission is handled effectively and consistently.
